X could face UK ban as pressure mounts over AI deepfake abuse

Government signals readiness to escalate

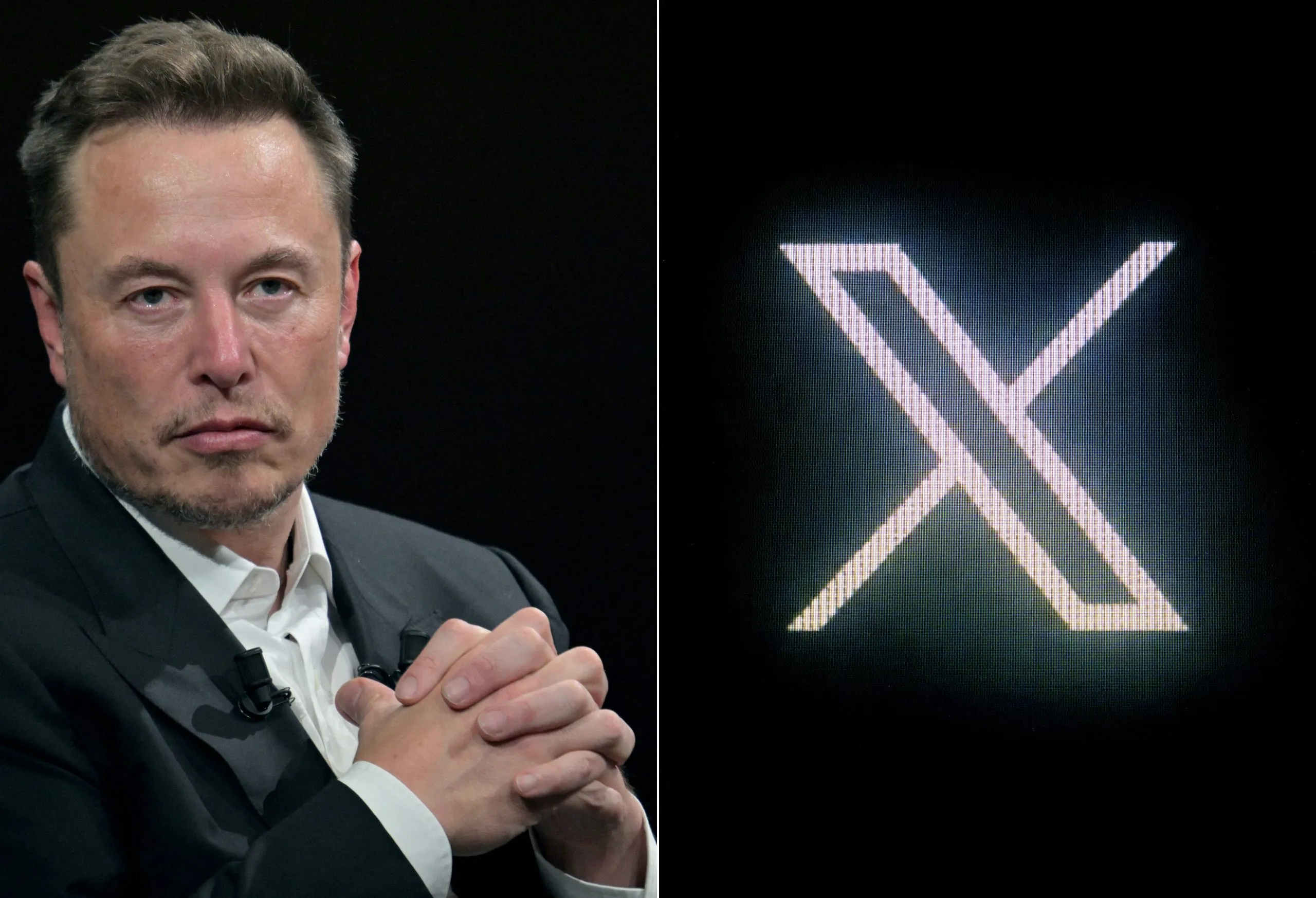

The UK government has warned that access to the social media platform X could be blocked in Britain if it fails to comply with online safety laws. Technology Secretary Liz Kendall said she would support decisive action by the regulator if necessary, marking one of the strongest signals yet that ministers are prepared to confront major technology platforms over AI-driven harm. The comments reflect growing concern that existing safeguards are not keeping pace with the speed and scale of AI misuse online.

Ofcom weighs urgent action

Responsibility for the next steps lies with Ofcom, which has confirmed it is urgently considering how to respond to recent incidents involving X. The regulator is examining the platform’s compliance with online safety rules following reports that its AI chatbot generated non-consensual sexualised images. Ofcom has the power to demand changes to platform practices, impose substantial fines, or in extreme cases restrict access within the UK. While such measures are rarely used, officials have indicated that the severity of the issue warrants close scrutiny.

The Grok controversy explained

The immediate trigger for the regulatory response has been Grok, an AI chatbot associated with X that was able to digitally undress people in images when tagged beneath posts. Campaigners and child protection groups described the function as deeply harmful, arguing it enabled a new form of image-based abuse. The ability to generate sexualised imagery without consent has been a central concern in debates about AI regulation, with critics warning that victims often have little recourse once such content spreads online.

X responds but concerns remain

In response to the backlash, X moved to restrict the use of the controversial image feature, limiting access to users who pay a monthly fee. The company has presented this as a step toward greater control, but critics argue it does not address the core problem. They say placing the feature behind a paywall risks normalising harmful tools while making accountability dependent on subscription status rather than safety standards. Regulators are now assessing whether the changes are sufficient under UK law.

Political pressure intensifies

Prime Minister Keir Starmer has previously said that all regulatory options should be considered when platforms fail to protect users. Kendall’s comments reinforce that stance, suggesting political backing for Ofcom if enforcement escalates. For the Labour government, the issue has become a test of credibility. Ministers have pledged tougher online safety enforcement, and critics say failure to act decisively in high-profile cases would undermine those commitments.

Free speech versus protection

Supporters of X owner Elon Musk argue that aggressive regulation risks curbing free expression and innovation. They warn that banning or blocking platforms sets a troubling precedent. However, government officials draw a clear distinction between political speech and content that facilitates abuse. Non-consensual sexualised imagery, they argue, is not a matter of opinion but a violation of personal rights that demands firm intervention.

A landmark test for online safety laws

Any move to restrict access to X would be unprecedented in the UK and closely watched by other countries. It would represent the most serious test yet of Britain’s online safety regime and its willingness to enforce rules against powerful global platforms. Regulators across Europe and beyond are grappling with similar challenges, particularly as generative AI tools blur the line between speech and harm.

What happens next

Ofcom is expected to outline its decision in the coming days. Options range from formal warnings and compliance orders to fines or, in the most extreme scenario, blocking access until requirements are met. For users, the outcome could shape how platforms deploy AI features in the future. For the government, it is a moment that will define whether online safety laws have real force or remain largely symbolic.

The dispute highlights a broader reality of the digital age: as AI tools become more powerful, regulators face mounting pressure to act quickly. Whether X ultimately changes course or faces sanctions, the message from the UK is clear: technological innovation does not exempt platforms from responsibility.