Parliament Debates New AI Safety Bill

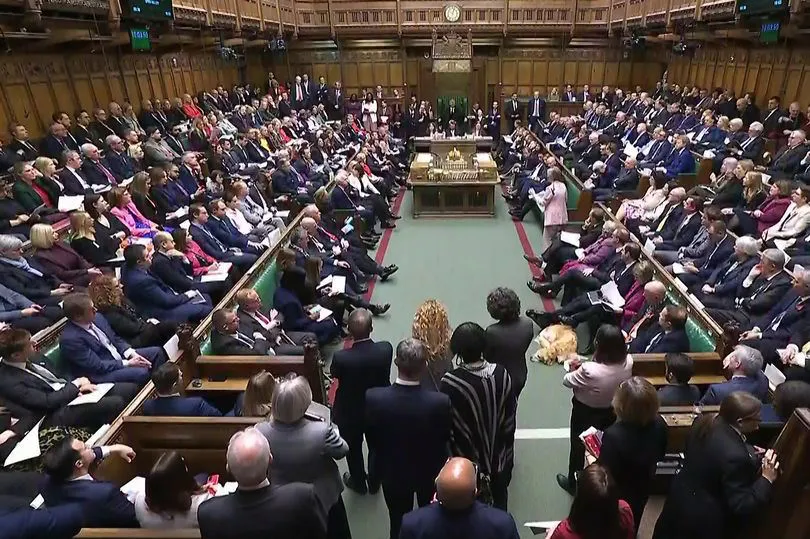

The United Kingdom’s House of Commons opened a new session this week centered on one of the most anticipated policy debates of the year: the Artificial Intelligence Safety Bill. Lawmakers from across party lines are weighing the balance between innovation, national security, and ethical responsibility as artificial intelligence continues to reshape key sectors from healthcare to defense.

The bill seeks to establish a comprehensive regulatory framework for AI development, mandating transparency standards, data-use audits, and certification procedures for companies deploying large-scale models. It reflects growing concern among policymakers that the absence of structured oversight could expose the economy to systemic risks while undermining public confidence in digital transformation.

Balancing Innovation and Regulation

During the opening debate, the Prime Minister described the legislation as a “forward-looking safeguard” intended to strengthen trust in the nation’s technology ecosystem. Proponents argue that a clear regulatory architecture will attract responsible investment and prevent misuse of AI systems in areas such as surveillance, misinformation, and autonomous decision-making.

However, several Members of Parliament cautioned against over-regulation that could stifle Britain’s innovation leadership. They called for proportionate rules that recognize the differences between consumer applications, enterprise models, and open-source research projects. The challenge for Parliament lies in designing a framework that supports both ethical governance and commercial agility.

Industry associations, including TechUK and AI Council UK, submitted policy briefs emphasizing that innovation flourishes where regulation is predictable. They recommended the creation of a national testing sandbox that would allow startups to experiment with emerging models under government-supervised ethical standards.

The Role of Public Trust and Data Governance

At the heart of the bill is the question of public trust. Policymakers are responding to concerns over data misuse, algorithmic bias, and the lack of accountability when AI decisions affect employment or access to public services. The bill introduces mandatory explainability standards for AI deployed in sensitive areas such as credit scoring, recruitment, and law enforcement.

Under the proposal, companies would be required to publish annual transparency reports outlining data sources, decision metrics, and risk-mitigation steps. The Information Commissioner’s Office and the Digital Markets Unit would gain expanded powers to audit AI systems and impose penalties for non-compliance.

Supporters believe these measures will align Britain’s AI regulation with evolving European and OECD norms, while maintaining flexibility for domestic innovation.

Industry Response and Economic Implications

Reaction from the private sector has been largely supportive, with major AI firms welcoming the government’s consultative approach. Executives from leading research laboratories and fintech platforms testified before Parliament, noting that standardized safety requirements will enhance global investor confidence.

Some companies, however, have raised questions about implementation costs. Smaller developers argue that compliance mechanisms may favor large corporations capable of absorbing regulatory expenses. To address this, the bill includes financial-assistance provisions for small and medium-sized enterprises working on high-impact research aligned with national priorities.

Economists suggest that a balanced AI Safety Bill could strengthen London’s reputation as a trusted innovation hub. By embedding ethics and oversight into the innovation cycle, the UK may attract both foreign investment and international partnerships seeking reliable governance models.

International Cooperation and Strategic Outlook

The debate also carries significant geopolitical weight. The United Kingdom has been positioning itself as a bridge between the United States and the European Union on digital-ethics policy, and cooperation with global partners on AI safety is viewed as a cornerstone of Britain’s international technology strategy.

Recent statements from the Department for Science, Innovation and Technology indicate plans to work with the OECD and G7 digital ministers to harmonize safety standards and share risk-assessment frameworks. Analysts note that aligning with international partners would help prevent regulatory fragmentation and ensure British companies can compete globally.

Lawmakers are expected to complete the committee review of the bill before the end of the quarter, after which it will move to the House of Lords for further scrutiny. If passed, the AI Safety Bill would mark one of the most comprehensive legislative efforts to govern artificial intelligence anywhere in the world.

A Defining Moment for Britain’s Digital Future

For policymakers, the debate represents more than a legislative milestone. It signals a broader national effort to define how technology should serve society rather than dominate it. The success of the bill will depend on the government’s ability to implement flexible yet firm oversight, balancing rapid innovation with moral responsibility.

As the session continues, all eyes remain on Westminster. The outcome will determine whether the United Kingdom can emerge as a global standard-setter in ethical AI governance while preserving its long-standing reputation as Europe’s innovation capital.