Are AI Prompts Undermining Our Thinking Skills?

Artificial intelligence tools have become part of everyday problem solving. From drafting emails and structuring essays to analysing data and preparing job applications, millions of people now rely on AI prompts to speed up tasks that once required sustained mental effort. While the convenience is undeniable, a growing number of researchers are asking an uncomfortable question. Could frequent reliance on generative AI be weakening our ability to think critically?

The concern is not about artificial intelligence replacing human intelligence outright, but about how habitual outsourcing of cognitive tasks might change the way the brain works. When an AI system provides instant structure, summaries or solutions, the human user may engage less deeply with the problem itself. Over time, some experts fear this could dull core skills such as reasoning, creativity and problem solving.

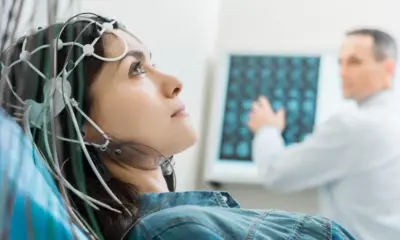

Recent research has added weight to that debate. Earlier this year, scientists at the Massachusetts Institute of Technology published a study examining how the brain behaves when people use generative AI to complete writing tasks. Using electroencephalography to track brain activity, researchers compared participants who wrote essays with the help of ChatGPT with those who worked unaided.

The findings were striking. Participants who relied on the AI showed reduced activity in brain networks associated with higher-level cognitive processing. These networks are typically involved in organising ideas, evaluating arguments and maintaining focus. In contrast, those who wrote without AI assistance displayed stronger engagement across these networks.

Researchers were careful to stress that the study does not prove AI use permanently damages the brain. Instead, it suggests that when cognitive work is outsourced, the brain may simply do less work in that moment. The question is whether repeated use creates a habit of reduced engagement, particularly when AI tools are used for tasks that would otherwise stretch mental capacity.

This concern extends beyond academic writing. AI is increasingly used to generate business strategies, interpret data, create presentations and even make decisions. When systems offer polished outputs instantly, users may skip the slower process of thinking through alternatives, questioning assumptions or exploring nuance.

Cognitive scientists have long warned about similar effects with other technologies. Calculators, GPS navigation and spell checkers all reduce the need for certain mental skills. However, those tools typically replaced narrow functions. Generative AI operates at a higher level, assisting with reasoning, synthesis and judgement, functions once considered uniquely human.

That distinction matters. Writing an essay or analysing information is not just about producing an answer. It is about the thinking that happens along the way. Struggling with ideas, revising arguments and identifying gaps all contribute to learning. If AI removes that friction, the user may still get a result, but with less mental development.

Education experts are particularly concerned about younger users. Students who rely heavily on AI prompts may complete assignments efficiently while missing opportunities to build foundational skills. Some educators worry this could create a gap between apparent performance and underlying understanding, especially if AI use becomes routine rather than strategic.

At the same time, many researchers caution against alarmism. AI tools can also enhance thinking when used thoughtfully. For example, prompting an AI to challenge an argument, suggest counterpoints or explain a concept in multiple ways can stimulate deeper engagement. The key factor appears to be how the tool is used, not simply whether it is used.

There is also evidence that AI can reduce cognitive load in beneficial ways. By handling repetitive or mechanical tasks, AI may free up mental resources for higher-level thinking. In complex fields such as science, engineering or medicine, this could support better decision-making rather than replace it.

The risk arises when AI becomes a default solution rather than a support tool. If users consistently defer judgement to an algorithm, they may lose confidence in their own reasoning. Over time, that could weaken skills that rely on practice, such as evaluating evidence or constructing original ideas.

Technology companies are aware of these concerns, though responses remain limited. Most AI systems are designed to maximise speed and helpfulness, not to encourage struggle or reflection. Some experts argue that future tools should be built with cognitive development in mind, prompting users to think rather than simply delivering answers.

For individuals, the challenge is developing healthy habits. Experts suggest treating AI like a collaborator rather than a replacement. Attempting tasks independently first, using AI to refine rather than generate ideas, and questioning outputs critically can help maintain mental engagement.

The MIT study adds an important data point to an evolving discussion. It does not suggest abandoning AI, but it does highlight the need for awareness. Tools that save time can also change how we think, often in subtle ways.

As generative AI becomes embedded in daily life, society faces a broader question. Do we value efficiency above all else, or do we preserve spaces for slow, effortful thinking? The answer may determine not just how we work, but how we learn, reason and create in an age of intelligent machines.