
One in Three in the UK Now Turn to AI for Emotional Support and Conversation

Artificial intelligence is increasingly being used not just as a productivity tool, but as a source of emotional support and social interaction, according to new research published by a UK government body. The findings highlight a significant shift in how people relate to technology and raise new questions about the role AI is beginning to play in everyday life.

The study found that around one in three adults in the UK have used AI systems for emotional support or conversation. More strikingly, one in 25 people said they turn to AI for support or companionship every day. The data comes from the first report published by the AI Security Institute, which has been examining the capabilities and risks of advanced artificial intelligence.

The report is based on two years of testing more than 30 advanced AI systems. Although the systems were not named, researchers assessed their performance in areas considered critical to public safety, including cybersecurity, chemistry and biology. Alongside these technical evaluations, the institute also examined how people interact with AI in real-world settings, revealing the growing emotional role these systems are playing.

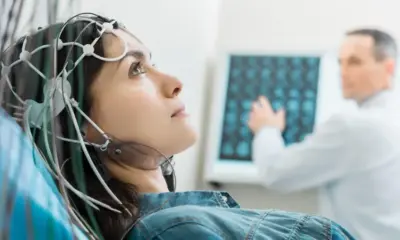

Experts say the findings reflect broader changes in society. As AI-powered chatbots become more conversational and responsive, they increasingly resemble companions rather than tools. For some users, AI offers a non-judgmental space to talk through problems, practise conversations or alleviate loneliness. The technology is always available, does not tire and responds instantly: qualities that can be appealing in moments of stress or isolation.

The rise in emotional AI use comes against a backdrop of growing concerns about loneliness and mental health. Surveys have shown increasing levels of social isolation across age groups, particularly among younger adults and older people living alone. In that context, AI systems can fill gaps left by limited access to human support, whether through stretched healthcare services or fragmented social networks.

However, the trend has prompted unease among researchers and policymakers. While AI can provide comfort or distraction, it is not a substitute for human connection or professional mental health care. There are concerns that reliance on AI for emotional support could discourage people from seeking help from friends, family or trained professionals, particularly if the technology appears empathetic without truly understanding human experience.

The UK government said the AI Security Institute’s work would inform future policy and regulation. Officials said the testing programme aims to help companies identify and address risks before AI systems are deployed at scale. The goal, they said, is to encourage responsible development while maintaining the UK’s position as a leader in AI innovation.

One issue highlighted by experts is transparency. Many users may not fully understand the limitations of AI systems or how their data is being used. Conversations that feel private or supportive may still be processed, stored or analysed, raising questions about privacy and consent. There are also concerns about the potential for AI systems to reinforce harmful thinking if not carefully designed and monitored.

The report does not suggest that using AI for conversation is inherently harmful. In some cases, it may provide short-term support or help people articulate feelings they struggle to express elsewhere. Mental health charities have noted that AI tools can sometimes act as a stepping stone, encouraging users to seek further help.

Nevertheless, researchers stress the importance of boundaries. AI systems do not possess empathy, moral judgement or accountability. They generate responses based on patterns in data, not lived experience. Treating them as emotional substitutes rather than tools could blur lines that are important for wellbeing.

The findings also raise questions for developers. As companies compete to build more engaging and human-like AI, they must consider the ethical implications of emotional dependency. Designing systems that are supportive without encouraging reliance will be a key challenge.

For policymakers, the data underscores the need for updated safeguards. Existing regulations were largely written before AI systems became conversational companions. As usage patterns change, oversight frameworks may need to evolve to address emotional and psychological risks alongside technical ones.

The AI Security Institute said its future work will continue to assess how people interact with advanced AI, particularly as capabilities improve. The institute aims to provide early warnings about emerging risks, allowing regulators and companies to respond proactively rather than reactively.

As AI becomes more embedded in daily life, its role is expanding beyond work and entertainment into deeply personal spaces. The fact that millions of people now turn to machines for conversation and comfort marks a significant cultural shift.

Whether this trend ultimately helps or harms society will depend on how AI is designed, regulated and understood. For now, the findings serve as a reminder that artificial intelligence is no longer changing only how we work, but also how we relate, cope and connect.